TL;DR: As weird as it might sound, seeing a few false positives

reported by a security scanner is probably a good sign and

certainly better than seeing none. Let’s explain why.

Introduction

False positives have made a somewhat unexpected appearance in

our lives in recent years. I am, of course, referring to the

COVID-19 pandemic, which required massive testing campaigns in

order to control the spread of the virus. For the record, a false

positive is a result that appears positive (for COVID-19 in our case) when it is actually negative (the person is not infected).

More commonly, we speak of false alarms.

In computer security, we are also often confronted with false

positives. Ask the security team behind any SIEM what their biggest

operational challenge is, and chances are that false positives will

be mentioned. A recent report[1] estimates that as many as 20% of all the alerts received by security professionals are false positives, making them a major source of fatigue.

Yet the story behind false positives is not as simple as it

might appear at first. In this article, we will argue that, when evaluating an analysis tool, seeing a moderate rate of false positives is a rather good sign of efficiency.

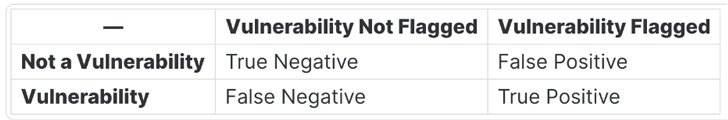

What are we talking about exactly?

With static analysis[2]

in application security, our primary concern is to catch all the

true vulnerabilities by analyzing source code.

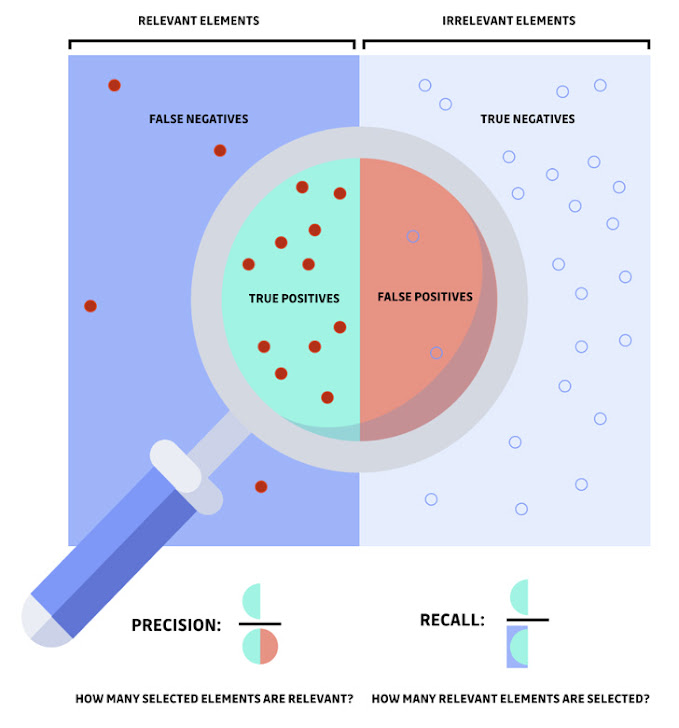

Here is a visualization to better grasp the distinction between

two fundamental concepts of static analysis: precision and recall.

The magnifying glass represents the sample that was identified or

selected by the detection tool. You can learn more about how to

assess the performance of a statistical process here[3].

Let’s see what that means from an engineering point of view:

- by reducing false positives, we improve precision (all vulnerabilities detected actually represent a security issue).
- by reducing false negatives, we improve recall (all vulnerabilities present are correctly identified).
- at 100% recall, the detection tool would never miss a vulnerability.
- at 100% precision, the detection tool would never raise a false alert.
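To make the two definitions above concrete, here is a minimal sketch that computes precision and recall from raw counts. The function name and the example counts are hypothetical and chosen only for illustration; treating an empty denominator as a perfect score is a convention assumed here, not something the article specifies.

```python
def precision_recall(true_positives: int, false_positives: int,
                     false_negatives: int) -> tuple[float, float]:
    """Return (precision, recall) from raw detection counts."""
    reported = true_positives + false_positives      # everything the tool flagged
    actual = true_positives + false_negatives        # everything truly vulnerable
    # Assumed convention: with nothing reported (or nothing to find), score 1.0.
    precision = true_positives / reported if reported else 1.0
    recall = true_positives / actual if actual else 1.0
    return precision, recall


# Hypothetical scan: 8 real issues found, 2 false alarms, 4 real issues missed.
precision, recall = precision_recall(8, 2, 4)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this made-up scan, 8 of the 10 alerts are real (precision) while 8 of the 12 real issues were caught (recall), which shows that the two numbers can diverge on the same run.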

Put another way, a vulnerability scanner’s objective is to fit

the circle (in the magnifying glass) as close as possible to the

left rectangle (relevant elements).

The problem is that the answer is rarely clear-cut, meaning

trade-offs are to be made.

So, what is more desirable: maximizing precision or recall?

Which one is worse, too many false positives or too many false

negatives?

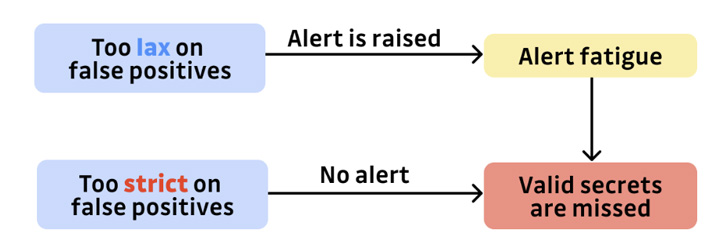

To find out, let’s take it to both extremes: imagine that a detection tool only alerts its users when the probability that a given piece of code contains a vulnerability is greater than 99.999%. With such a high threshold, you can be almost certain that an alert is indeed a true positive. But how many security problems are going to go unnoticed because of the scanner’s selectiveness? A lot.

Now, on the contrary, what would happen if the tool were tuned to never miss a vulnerability (maximize the recall)? You guessed it:

you would soon be faced with hundreds or even thousands of false

alerts. And there lies a greater danger.
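The two extremes described above can be sketched with a toy example. The scores and labels below are entirely made up, and `evaluate` is a hypothetical helper; the only point is to show how moving an alerting threshold trades precision against recall.

```python
# Hypothetical scored findings: (scanner confidence, is it a real vulnerability?)
FINDINGS = [
    (0.99, True), (0.95, True), (0.90, False), (0.80, True),
    (0.60, True), (0.40, False), (0.30, True), (0.10, False),
]


def evaluate(threshold: float) -> tuple[float, float]:
    """Precision and recall when alerting only on scores >= threshold."""
    alerts = [is_real for score, is_real in FINDINGS if score >= threshold]
    true_positives = sum(alerts)
    total_real = sum(is_real for _, is_real in FINDINGS)
    # Assumed convention: no alerts at all counts as perfect precision.
    precision = true_positives / len(alerts) if alerts else 1.0
    recall = true_positives / total_real
    return precision, recall


# From very selective to "never miss anything".
for threshold in (0.95, 0.5, 0.0):
    precision, recall = evaluate(threshold)
    print(f"threshold={threshold:.2f} precision={precision:.3f} recall={recall:.3f}")
```

With this data, the strictest threshold raises only correct alerts but misses three of the five real issues, while the loosest one catches everything at the cost of three false alarms out of eight alerts.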

As Aesop warned us in his fable The Boy Who Cried Wolf[4], anyone who just repeats

false claims will end up not being listened to. In our modern

world, the disbelief would materialize as a simple click to deactivate the security notifications and restore peace, or as simply ignoring them if deactivation isn’t allowed. But the consequences could be at least as dramatic as they are in the fable.

It’s fair to say that alert fatigue is probably the number one

reason static analysis fails so often. Not only are false alarms

the source of failure of entire application security programs, but

they also cause much more serious damage, such as burnout and turnover.

And yet, despite all the evils attributed to them, you would be

mistaken to think that if a tool does not carry any false

positives, then it must bring the definitive answer to this

problem.

How to learn to accept false positives

To accept false positives, we have to go against that basic

instinct that often pushes us towards early conclusions. Another

thought experiment can help us illustrate this.

Imagine that you are tasked with comparing the performance of

two security scanners A and B.

After running both tools on your benchmark, the results are the

following: scanner A only detected valid vulnerabilities,

while scanner B reported both valid and invalid

vulnerabilities. At this point, who wouldn’t be tempted to

draw an early conclusion? You’d have to be a wise enough observer

to ask for more data before deciding. The data would most probably reveal that some valid vulnerabilities reported by B had been silently ignored by A.
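Here is a small sketch of what "asking for more data" could look like in this thought experiment, assuming the benchmark comes with a known ground truth. All identifiers and report contents are hypothetical.

```python
# Hypothetical benchmark: the findings known to be real.
GROUND_TRUTH = {"vuln-1", "vuln-2", "vuln-3", "vuln-4", "vuln-5"}

# Hypothetical reports from the two scanners.
REPORT_A = {"vuln-1", "vuln-2"}                                  # only valid findings
REPORT_B = {"vuln-1", "vuln-2", "vuln-3", "vuln-4", "noise-1"}   # valid and invalid


def summarize(report: set[str]) -> dict[str, set[str]]:
    """Split a report into true positives, false positives, and misses."""
    return {
        "true_positives": report & GROUND_TRUTH,
        "false_positives": report - GROUND_TRUTH,
        "missed": GROUND_TRUTH - report,
    }


summary_a = summarize(REPORT_A)
summary_b = summarize(REPORT_B)

# Real issues that B caught but A silently skipped.
missed_only_by_a = summary_a["missed"] - summary_b["missed"]
print("A false positives:", sorted(summary_a["false_positives"]))
print("B false positives:", sorted(summary_b["false_positives"]))
print("Found by B, missed by A:", sorted(missed_only_by_a))
```

Looking only at false positives, A appears flawless. Once the misses are counted, A has overlooked three of the five real issues while B has overlooked one.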

You can now see the basic idea behind this article: any tool,

process, or company claiming that they are completely free

from false positives should sound suspicious. If that were truly

the case, chances would be very high that some relevant elements

were silently skipped.

Finding the balance between precision and recall is a subtle matter and requires a lot of tuning effort (you can read how GitGuardian engineers are improving the model precision[5]). Not only that, but it is also absolutely normal to see it occasionally fail. That’s why you should be more worried about seeing no false positives than about seeing a few.

But there is also another reason why false positives might in

fact be an interesting signal too: security is never “all white or

all black”. There is always a margin where “we don’t know”, and

where human scrutiny and triage become essential.

“Due to the nature of the software we write, sometimes we get false positives. When that happens, our developers can fill out a form and say, ‘Hey, this is a false positive. This is part of a test case. You can ignore this.’” (Source[6])

There lies a deeper truth: it is not just about raw numbers, it is also about how they will be used. False positives are useful from that perspective: they

help improve the tools and refine algorithms so that context is

better understood and considered. But like an asymptote, the

absolute 0 can never be reached.

There is one necessary condition to transform what seems like a

curse into a virtuous circle though. You have to make sure that

false positives can be flagged and incorporated in the detection

algorithm as easily as possible for end-users. One of the most

common ways to achieve that is to simply offer the possibility to

exclude files, directories, or repositories from the scanned

perimeter.
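As a rough illustration of excluding paths from the scanned perimeter, the sketch below filters a list of files against glob-style patterns. The patterns, file names, and helper functions are hypothetical, and this is not the configuration format of any particular scanner.

```python
from fnmatch import fnmatchcase

# Hypothetical exclusion patterns a team might maintain.
IGNORED_PATTERNS = ["tests/*", "docs/*", "*.md"]


def is_excluded(path: str) -> bool:
    """True if the path matches at least one exclusion pattern."""
    return any(fnmatchcase(path, pattern) for pattern in IGNORED_PATTERNS)


def files_to_scan(paths: list[str]) -> list[str]:
    """Keep only the paths that remain inside the scanned perimeter."""
    return [path for path in paths if not is_excluded(path)]


candidates = [
    "src/app.py",
    "tests/fixtures/fake_key.py",
    "README.md",
    "docs/setup.txt",
]
print(files_to_scan(candidates))
```

Exclusions like these trade a little recall for fewer false alarms, so they are worth reviewing from time to time: a real secret placed in an excluded directory would go unreported.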

At GitGuardian, we specialize in secrets detection[7]. We have pushed the idea of enriching every finding with as much context as possible, leading to much faster feedback cycles and alleviating the workload as much as possible.

If a developer tries to commit a secret with the client-side

ggshield[8]

installed as a pre-commit hook, the commit will be stopped unless

the developer flags it as a secret to ignore[9]. From there, the secret

is considered a false positive and won’t trigger an alert anymore, but only on that developer’s local workstation. Only a security team member with

access to the GitGuardian dashboard is able to flag a false

positive for the entire team (global ignore).

If a leaked secret is reported, we provide tools to help the security team quickly dispatch it. For example, the auto-healing playbook[10] automatically sends an email to the developer who committed the secret. Depending on the

playbook configuration, developers can be allowed to resolve or

ignore the incident themselves, lightening the amount of work left

to the security team.

These are just a few examples of how we learned to tailor the

detection and remediation processes around false positives, rather

than obsessing about eliminating them. In statistics, this

obsession even has a name: it’s called overfitting, and it means

that your model is too dependent on a particular set of data.

Lacking real-world inputs, the model wouldn’t be useful in a

production setting.

Conclusion

False positives cause alert fatigue and derail security programs

so often that they are now widely considered pure evil. It is true

that when considering a detection tool, you want the best precision

possible, and having too many false positives causes more problems

than not using any tool in the first place. That being said, never

overlook the recall rate.

At GitGuardian, we designed a wide arsenal of generic detection

filters[11] to improve our secrets

detection engine’s recall rate.

From a purely statistical perspective, having a low but non-zero rate of false positives is a rather good sign, meaning that few defects slip through the net.

When kept under control, false positives are not that bad. They can even be used to your advantage since they indicate where improvements can be made, both on the analysis side and on the remediation side.

Understanding why something was considered “valid” by the system

and having a way to adapt to it is key to improving your

application security. We are also convinced it is one of the areas

where the collaboration between security and development teams

really shines.

As a final note, remember: if a detection tool does not report

any false positives, run. You are in for big trouble.

Note — This article is written and contributed by Thomas

Segura, technical content writer at GitGuardian.

References

- [1] report (orca.security)
- [2] static analysis (blog.gitguardian.com)
- [3] here (blog.gitguardian.com)
- [4] The Boy Who Cried Wolf (en.wikipedia.org)
- [5] model precision (blog.gitguardian.com)
- [6] Source (www.itcentralstation.com)
- [7] secrets detection (www.gitguardian.com)
- [8] ggshield (github.com)
- [9] flags it as a secret to ignore (docs.gitguardian.com)
- [10] auto-healing playbook (docs.gitguardian.com)
- [11] generic detection filters (blog.gitguardian.com)

Read more https://thehackernews.com/2022/08/the-truth-about-false-positives-in.html